CBMM Workshop on

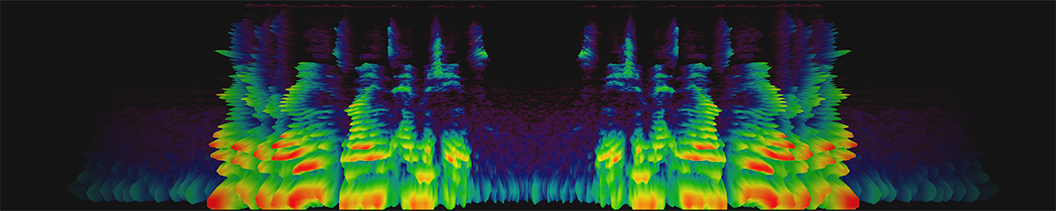

Speech Representation, Perception and Recognition

Thursday, Feb. 02 - Friday, Feb. 03, 2017

MIT Brain and Cognitive Sciences Complex (Building 46), Singleton Auditorium (46-3002)

The two-day workshop on “Speech Representation, Perception and Recognition” is organized by the Center for Brains, Minds and Machines (CBMM) and will feature invited talks from leading speech researchers as well as focused discussion sessions. The workshop focuses on the computations and learning that underlie human speech understanding and that speech-enabled machines require, following CBMM’s mission to understand intelligence in brains and replicate it in machines. It will bring together experts in neuroscience, perception, development, machine learning, automatic speech recognition and speech synthesis.

Topics:

- Speech Neuroscience
- Speech and Voice Perception
- Development and Language
- Machine-based Speech Recognition
- Speech Synthesis

Participation:

By invitation, and open to a limited number of attendees through RSVP.

Format and registration:

Two-day workshop with 16 invited talks from academia and industry, plus scheduled discussion time. A limited number of attendee spots will be available through RSVP on a first-come basis.

Schedule:

Thu., Feb. 02

Morning:

08:30 Coffee and light breakfast

08:50 Introductory Remarks

09:00 Talk: Keith Johnson (UC Berkeley)

09:45 Talk: Abdelrahman Mohamed (Microsoft Research)

10:30 Coffee Break

11:00 Talk: Edward Chang (UC San Francisco)

11:45 Talk: Sophie Scott (UCL)

12:30 Lunch

Afternoon:

13:30 Talk: David Poeppel (Max-Planck-Institute and NYU)

14:15 Talk: Karen Livescu (TTI)

15:00 Coffee Break

15:30 Talk: Bob McMurray (U. Iowa)

16:15 Talk: Georgios Evangelopoulos (CBMM, MIT)

17:00 Moderated Discussion

18:30 Dinner (Catalyst) - by invitation

Fri., Feb. 03

Morning:

08:30 Coffee and light breakfast

09:00 Talk: Yoshua Bengio (U. Montreal)

09:45 Talk: Heiga Zen (Google)

10:30 Coffee Break

11:00 Talk: Josh McDermott (CBMM, MIT)

11:45 Talk: Matt Davis (MRC Cognition and Brain Sciences Unit)

12:30 Lunch

Afternoon:

13:30 Talk: Jim Glass (MIT)

14:15 Talk: Emmanuel Dupoux (Ecole Normale Superieure, EHESS, CNRS)

15:00 Coffee Break

15:30 Talk: Naomi Feldman (U. Maryland)

16:15 Talk: Brian Kingsbury (IBM)

17:00 Moderated Discussion

18:10 Closing Remarks

18:30 Reception (Building 46 Atrium)

Speakers:

Titles and abstracts:

Yoshua Bengio, U. Montreal

Title: Deep Generative Models for Speech and Images

Abstract: TBA

Eddie Chang, UCSF

Title: The dual stream in human auditory cortex

Abstract: In this talk, I will discuss parallel information processing of speech sounds in two dominant streams throughout the entire human auditory cortex.

Matt Davis, MRC Cognition and Brain Sciences Unit, Cambridge, UK

Title: Predicting and perceiving degraded speech

Abstract: Human listeners are particularly good at perceiving ambiguous and degraded speech signals. In this talk I will show how listeners use prior predictions to guide immediate perception and longer-term perceptual learning of artificially degraded (noise-vocoded) speech. Our behavioural, MEG/EEG and fMRI observations are uniquely explained by computational simulations that use predictive coding mechanisms.

Emmanuel Dupoux, Ecole Normale Superieure / EHESS / CNRS, France

Title: Can machine learning help to understand infant learning?

Abstract: TBA

Georgios Evangelopoulos, CBMM, MIT

Title: Learning with Symmetry and Invariance for Speech Perception

Abstract: TBA

Naomi Feldman, U. Maryland

Title: A framework for evaluating speech representations

Abstract: Representation learning can improve ASR performance substantially by creating a better feature space. Do human listeners also engage in representation learning, and if so, what does this learning process consist of? To investigate human representation learning, we need a way to evaluate how closely a feature space corresponds with human perception. I argue that we should be using cognitive models to measure this correspondence, and give an example of how we can do so using a cognitive model of discrimination. This is joint work with Caitlin Richter, Harini Salgado, and Aren Jansen.

Jim Glass, MIT

Title: Unsupervised Learning of Spoken Language with Visual Context

Abstract: Despite continuous advances over many decades, automatic speech recognition remains fundamentally a supervised learning scenario that requires large quantities of annotated training data to achieve good performance. This requirement is arguably the major reason that less than 2% of the world's languages have achieved some form of ASR capability. Such a learning scenario also stands in stark contrast to the way that humans learn language, which inspires us to consider approaches that involve more learning and less supervision. In our recent research towards unsupervised learning of spoken language, we are investigating the role that visual contextual information can play in learning word-like units from unannotated speech. In this talk, I describe our recent efforts to learn an audio-visual embedding space using a deep learning model that associates images with corresponding spoken descriptions. Through experimental evaluation and analysis, we show that the model is able to learn a useful word-like embedding representation that can be used to cluster visual objects and their spoken instantiations.

Keith Johnson, UC Berkeley

Title: Speech Representations and Speech Tasks

Abstract: At one point in my research career I was interested in finding “the” representation of speech. But clearly, there is no one level of speech representation. Researchers find it useful to represent speech in a variety of ways. Linguists and phoneticians use phonemes, distinctive features, gestures, and locus equations. Engineers use MFCCs, triphones, and delta features. Psychoacousticians use STRFs and modulation spectra. This is all well and good for analysts: we have different speech tasks and need to find answers to different kinds of questions, so naturally we will use different ways to represent speech. But do listeners also have different tasks to accomplish with speech, and are these different tasks accomplished by reference to different representations? The answer is yes. This talk will discuss some of the speech tasks that listeners face, and the evidence that listeners use different representations in support of these tasks.

Brian Kingsbury, IBM

Title: Multilingual representations for low-resource speech processing

Abstract: A key to achieving good automatic speech recognition performance has been the availability of vast amounts of labeled and unlabeled speech and text data that can be used to train speech models; however, there are thousands of languages in the world that we would like to process automatically and it is impractical to count on having access to thousands of hours of speech and billions of words of text in all of them. In this talk I will describe how multilingual speech representations learned from a variety of languages can reduce the amount of data needed to train a speech recognition system in a new language.

Karen Livescu, TTI

Title: Acoustic word embeddings

Abstract: For a number of speech tasks, it can be useful to represent speech segments of arbitrary length by fixed-dimensional vectors, or embeddings. In particular, vectors representing word segments -- acoustic word embeddings -- can be used in query-by-example tasks, example-based speech recognition, or spoken term discovery. *Textual* word embeddings have been common in natural language processing for a number of years now; the acoustic analogue is only recently starting to be explored. This talk will present our work on acoustic word embeddings, including a variety of models in unsupervised, weakly supervised, and supervised settings.

Josh McDermott, MIT

Title: Hierarchical Processing in Human Auditory Cortex

Abstract: TBA

Bob McMurray, U. Iowa

Title: A data explanatory account of speech perception (and its limits)

Abstract: One of the most challenging aspects of speech perception is the rampant variability in the signal. One consequence of this variability is that purely bottom-up approaches to categorizing phonemes have not consistently been successful. Top-down accounts, analogous to predictive coding in vision, may offer more leverage. Drawing on phonetic analyses, computational models and behavioral experiments, I suggest that if listeners recode incoming acoustic information relative to expectations, this variability can largely be explained away, allowing for much more robust categorization. Predictions of this account are tested with ERP studies and studies of anticipatory processing. But this account may also have limits. Eye-tracking studies on the time course of cue integration and behavioral studies of categorization suggest phenomena that do not fall neatly under this rubric.

Abdelrahman Mohamed, Microsoft Research

Title: The promise of ASR: where we stand and what is still missing

Abstract: In the past decade, ASR technology has made a huge leap forward in terms of word recognition accuracy, leading to Microsoft's recent announcement of achieving human parity in conversational speech. In this talk, I will reflect on the recent advances in neural network models for acoustic modeling, with special interest in understanding the relation between different models. I will also discuss many outstanding research directions toward achieving the promise of conversational systems.

David Poeppel, Max-Planck-Institute and NYU

Title: Entrainment, segmentation, and decoding: three necessary computations for speech comprehension

Abstract: Neurophysiological experiments demonstrate that auditory cortical activity entrains to continuous speech. This entrainment, building on neural oscillations, underlies segmentation, and the segmented chunks in turn form the basis for decoding. To make contact with the stored representations (roughly, words) that form the basis of recognition, these operations are all necessary.

Sophie Scott, UCL

Title: The role(s) of hemispheric asymmetries and streams of processing in speech perception.

Abstract: I will talk about the potential for different perceptual representations of the speech signal and their relationship to anatomical and task based factors.

Heiga Zen, Google

Title: Generative Model-Based Text-to-Speech Synthesis

Abstract: Recent progress in generative modeling has improved the naturalness of synthesized speech significantly. In this talk I will summarize these generative model-based approaches for speech synthesis and describe possible future directions.

Organizing committee:

Georgios Evangelopoulos (CBMM, MIT), Josh McDermott (CBMM, MIT)
