Projects: Neural Circuits for Intelligence

Feedforward circuits have been shown to be very powerful as models of vision. However, these architectures are apparently incapable of dealing with many visual tasks that the human visual system finds simple, such as identifying occluded figures. Data from the Allen Institute for Brain Science Cortical Activity Map (CAM) project may give us the opportunity to explore this question. What is the functional connectivity of cells in mouse V1 ...
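"Functional connectivity" is often operationalized as the correlation between the activity traces of pairs of cells. The sketch below assumes that definition only; a project like this one may instead use noise correlations, model-based coupling terms, or other measures. The traces are made-up numbers, not Allen Institute data, and `functional_connectivity` is a hypothetical helper.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length activity traces."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a flat trace has no co-fluctuation to measure
    return cov / (sx * sy)

def functional_connectivity(traces):
    """Symmetric matrix of pairwise correlations; diagonal set to 1.0 by convention."""
    k = len(traces)
    return [[1.0 if i == j else pearson(traces[i], traces[j]) for j in range(k)]
            for i in range(k)]

# Hypothetical activity traces for three cells (illustrative values only)
traces = [
    [0.1, 0.5, 0.9, 0.4, 0.2],
    [0.2, 0.6, 1.0, 0.5, 0.3],  # cell 0 shifted up by 0.1: perfectly correlated
    [0.9, 0.5, 0.1, 0.6, 0.8],  # 1 - cell 0: perfectly anti-correlated
]
fc = functional_connectivity(traces)
```

Correlation is symmetric and says nothing about direction or about whether two cells share a common input, which is one reason functional connectivity is usually distinguished from anatomical connectivity.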

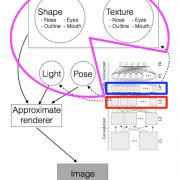

The spiking patterns of neurons in different fMRI-identified face patches show a hierarchical organization of face selectivity in the macaque brain: neurons in the most posterior patches (ML/MF) appear to be tuned to specific viewpoints, neurons in AL (a more anterior patch) exhibit specificity to mirror-symmetric viewpoints, and neurons in the most anterior patch (AM) appear to be largely view-invariant, i.e., they show specificity to individuals (Freiwald & Tsao, 2010).

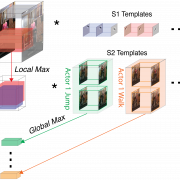

Recognizing actions from dynamic visual input is an important component of social and scene understanding. The majority of visual neuroscience studies, however, focus on recognizing static objects in artificial settings. Here we apply magnetoencephalography (MEG) decoding and a computational model to a novel data set of natural movies to provide a mechanistic explanation of invariant action recognition in human visual cortex.
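MEG decoding is commonly done in a time-resolved way: a classifier is trained and tested separately at each time point, giving a curve of decoding accuracy over time. The sketch below assumes a simple nearest-centroid classifier with leave-one-out cross-validation; the classifier choice, the data layout `data[trial][time][sensor]`, the labels, and the numbers are all hypothetical and not this project's pipeline.

```python
import math

def nearest_centroid_loo_accuracy(trials, labels):
    """Leave-one-out accuracy of a nearest-centroid classifier.

    trials: feature vectors (e.g., MEG sensor values at one time point)
    labels: class label of each trial
    """
    correct = 0
    for i, (x, y) in enumerate(zip(trials, labels)):
        # Build class centroids from every trial except the held-out one
        sums, counts = {}, {}
        for j, (xj, yj) in enumerate(zip(trials, labels)):
            if j == i:
                continue
            acc = sums.setdefault(yj, [0.0] * len(xj))
            for k, v in enumerate(xj):
                acc[k] += v
            counts[yj] = counts.get(yj, 0) + 1
        centroids = {c: [s / counts[c] for s in sums[c]] for c in sums}
        # Predict the class whose centroid is closest in Euclidean distance
        pred = min(centroids, key=lambda c: math.dist(x, centroids[c]))
        correct += pred == y
    return correct / len(trials)

def decode_over_time(data, labels):
    """data[trial][time][sensor] -> list of decoding accuracies, one per time point."""
    n_times = len(data[0])
    return [nearest_centroid_loo_accuracy([trial[t] for trial in data], labels)
            for t in range(n_times)]

# Hypothetical data: 4 trials x 2 time points x 2 sensors
data = [
    [[0.2, 0.1], [1.0, 0.0]],
    [[0.0, 0.3], [1.1, 0.1]],
    [[0.1, 0.2], [-1.0, 0.0]],
    [[0.3, 0.0], [-1.1, 0.1]],
]
labels = ["walk", "walk", "run", "run"]
accuracy = decode_over_time(data, labels)  # the two classes separate only at time point 1
```

In practice, invariance is often probed by training on one condition (e.g., one viewpoint) and testing on another; the same loop structure applies with a different train/test split.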

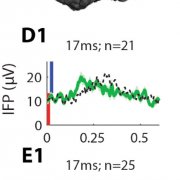

We take advantage of a rare opportunity to interrogate the neural signals underlying language processing in the human brain by invasively recording field potentials from the cortex of epileptic patients. These signals provide high spatial and temporal resolution and are therefore ideally suited to investigating language processing, a function that is difficult to study in animal models.

We are combining freely moving animal behavior (left), massively parallel recordings (middle), and real-time decoding of ensemble firing patterns to investigate and manipulate memory formation as it occurs.

The ability to extrapolate and make inferences from partial information is a central component of intelligence and manifests itself in all cognitive domains including language, vision, planning, and learning. This project aims to elucidate the computational mechanisms responsible for pattern completion by combining neurophysiological recordings, behavioral measurements and theoretical modeling. We focus on the problem of visual object completion, which is essential for the Center’s challenge of “What/Who is there?”.

The hippocampus and associated brain structures are known to simultaneously represent a subject’s ongoing location, directional heading, and movement-related correlates during active locomotion. Of these areas, the hippocampus appears to play a central role in navigation because, at fine temporal scales, its spatial representations also predict immediately upcoming locations, much like a neuronal GPS.
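Reading out location from hippocampal ensemble activity is often framed as Bayesian decoding. The sketch below assumes independent Poisson-spiking place cells with known tuning curves and a uniform prior over position bins; the tuning curves, time window, and spike counts are made up, and `decode_position` is a hypothetical helper rather than the decoder used in this project.

```python
import math

def decode_position(counts, tuning, dt):
    """Return the position bin with the highest posterior probability.

    counts: spike count of each cell in a window of length dt (seconds)
    tuning: tuning[i][x] = mean firing rate (Hz) of cell i in position bin x
    Assumes independent Poisson cells and a uniform prior over bins.
    """
    n_bins = len(tuning[0])
    log_post = []
    for x in range(n_bins):
        lp = 0.0
        for n, rates in zip(counts, tuning):
            lam = max(rates[x] * dt, 1e-12)  # expected count; floor avoids log(0)
            lp += n * math.log(lam) - lam    # Poisson log-likelihood, dropping -log(n!)
        log_post.append(lp)
    return max(range(n_bins), key=lambda x: log_post[x])

# Hypothetical tuning curves: three place cells over four position bins
tuning = [
    [20.0, 2.0, 0.5, 0.5],
    [1.0, 20.0, 2.0, 0.5],
    [0.5, 1.0, 20.0, 2.0],
]
estimate = decode_position([0, 1, 4], tuning, dt=0.25)  # cell 2 fires most
```

The dropped `-log(n!)` term does not depend on position, so it cannot change which bin wins.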

This project aims to use naturalistic dynamic stimuli in movies to study selectivity and invariance during visual recognition. The questions include “What/who is there?”, “What is the person doing?”, “What is where?”. These efforts combine computational modeling to evaluate biologically plausible algorithms and physiological recordings to investigate the neural circuits along the ventral visual stream involved in visual recognition for dynamic and complex stimuli.

Given an image, there is essentially an infinite number of questions that could be posed, all of which a human can answer effortlessly. This naturally raises the question: is the visual representation of an image fixed regardless of the task at hand? We are probing this question by combining visual psychophysics, invasive neurophysiological recordings in humans, and computational modeling. Our experimental paradigm consists of presenting images to patients undergoing surgery for epilepsy and asking a series of distinct questions. By understanding the brain's capability to perform this task, we hope to inform computational models that can accomplish the core CBMM Challenge.

Visual recognition takes a small fraction of a second and relies on the cascade of signals along the ventral visual stream. Given the rapid path through multiple processing steps between photoreceptors and higher visual areas, information must progress from stage to stage very quickly. This rapid progression of information suggests that fine temporal details of the neural response may be important to the brain’s encoding of visual signals.
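A toy example shows why spike timing can carry information that a spike count misses: two responses with the same number of spikes in a 150 ms window can differ only in when those spikes occur. The spike times, window, and 50 ms bin width below are made-up values, and both readout functions are hypothetical helpers, not an analysis from this project.

```python
def spike_count(spike_times, t_start, t_end):
    """Rate-style readout: number of spikes in [t_start, t_end)."""
    return sum(1 for t in spike_times if t_start <= t < t_end)

def binned_pattern(spike_times, t_start, t_end, bin_ms):
    """Timing-style readout: spike counts in consecutive bins of width bin_ms."""
    n_bins = int((t_end - t_start) // bin_ms)
    bins = [0] * n_bins
    for t in spike_times:
        if t_start <= t < t_end:
            idx = int((t - t_start) // bin_ms)
            if idx < n_bins:  # skip spikes in a trailing partial bin
                bins[idx] += 1
    return bins

# Hypothetical responses (ms after stimulus onset) to two different images
early = [52, 58, 61, 140]
late = [95, 120, 130, 148]

same_count = spike_count(early, 0, 150) == spike_count(late, 0, 150)
patterns = (binned_pattern(early, 0, 150, 50), binned_pattern(late, 0, 150, 50))
```

Here both responses contain four spikes, so a count-based readout cannot tell the images apart, while the binned patterns `[0, 3, 1]` and `[0, 1, 3]` differ. How fine the bins must be before this advantage appears is an empirical question.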

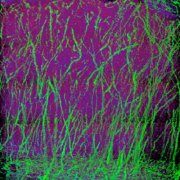

The circuits that generate intelligent behaviors are large, 3-D structures that operate with millisecond timescale precision and are organized at the nanoscale. We are working on new technologies, including novel methods of super-resolution light microscopy that can image large 3-D structures with nanoscale precision, novel methods of fast imaging of neural dynamics in the living brain, novel microfabricated electrode arrays that can record electrical activity of many neurons at once in the living mammalian and perhaps even human brain, and new ways to activate and silence the activity of neurons throughout neural networks with pulses of light.

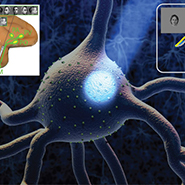

Abstract thinking and complex problem solving constitute paradigmatic examples of computation emerging from interconnected neuronal circuits. The biological hardware represents the output of millions of years of evolution leading to neuronal circuits that provide fast, efficient, and fault-tolerant solutions to complex problems. Progress toward a quantitative understanding of emergent intelligent computations in cortical circuits faces several empirical challenges (e.g., simultaneous recording and analysis of large ensembles of neurons and their interactions), and theoretical challenges (e.g., mathematical synthesis and modeling of the neuronal ensemble activity). Our team of theoreticians and neurophysiologists is focused on systematic, novel, and integrative approaches to deciphering the neuronal circuits underlying intelligence. Understanding neuronal circuits that implement solutions to complex challenges is an essential part of scientific reductionism, leading to insights useful for developing intelligent machines.