Projects: Vision and Language

Children learn to describe what they see through visual observation while overhearing incomplete linguistic descriptions of events and properties of objects. We have in the past made progress on the problem of learning the meaning of some words from visual observation and are now extending this work in several ways. This prior work required that the descriptions be unambiguous, meaning that they have a single syntactic parse.

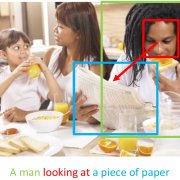

We have constructed techniques for describing videos with natural language sentences. Building on this work, we are going beyond description to answering questions such as: What is the person on the left doing with the blue object? This work takes as input a natural-language question and produces a natural-language answer.

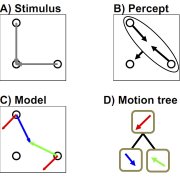

This project explores a Bayesian theory of vector analysis for hierarchical motion perception. The theory takes a step towards understanding how moving scenes are parsed into objects.

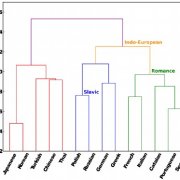

We are developing a computational framework for modeling language typology and understanding its role in second language acquisition. In particular, we are studying the cognitive and linguistic characteristics of cross-linguistic structure transfer by investigating the relations between the speakers’ native language properties and their usage patterns and mistakes in English.

We are constructing detectors which can determine gaze direction in 3D and to apply these detectors to model human-human and human-object interactions. We aim to predict the intentions and goals of the agents by utilizing their direction of gaze, head pose, body pose, and the spatial relations between agents and objects.

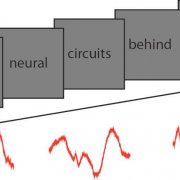

We take advantage of a rare opportunity to interrogate the neural signals underlying language processing in the human brain by invasively recording field potentials from the human cortex in epileptic patients. These signals provide high spatial and temporal resolution and therefore are ideally suited to investigate language processing, a question that is difficult to study in animal models.

Existing approaches to labeling images and videos with natural-language sentences generate either one sentence or a collection of unrelated sentences. Humans, however, produce a coherent set of sentences, which reference each other and describe the salient activities and relationships being depicted.

Many of the most interesting and salient activities that humans perform involve manipulating objects, which frequently involves using hands to grasp and move objects. Unfortunately, object detectors tend to fail at precisely this critical juncture where the salient part of the activity occurs because the hand occludes the object being grasped. At the same time, while a hand is manipulating an object, the hand is being significantly deformed making it more difficult to recognize.

Current hierarchical models of object recognition lack the non-uniform resolution of the retina, and they also typically neglect scale. We show that these two issues are intimately related, leading to several predictions. We further conjecture that the retinal resolution function may represent an optimal input shape, in space and scale, for a single feed-forward pass. The resulting outputs may encode structural information useful for other aspects of the CBMM challenge, beyond the recognition of single objects.

We are studying human visual processing using image patches that are minimal recognizable configurations (MiRC) of larger images. Although they represent only a small portion of the image, the majority of people can recognize MiRC images, while any cropping or down-sampling severely hurts recognition performance.

The goal of this research is to combine vision with aspects of language and social cognition to obtain complex knowledge about the environment. To obtain a full understanding of visual scenes, computational models should be able to extract from the scene any meaningful information that a human observer can extract about actions, agents, goals, scenes and object configurations, social interactions, and more. We refer to this as the ‘Turing test for vision,’ i.e., the ability of a machine to use vision to answer a large and flexible set of queries about objects and agents in an image in a human-like manner. Queries might be about objects, their parts, and spatial relations between objects, actions, goals, and interactions. Understanding queries and formulating answers requires interactions between vision and natural language. Interpreting goals and interactions requires connections between vision and social cognition. Answering queries also requires task-dependent processing, i.e., different visual processes to achieve different goals.

The goal of this research is to combine vision with aspects of language and social cognition to obtain complex knowledge about the environment. To obtain a full understanding of visual scenes, computational models should be able to extract from the scene any meaningful information that a human observer can extract about actions, agents, goals, scenes and object configurations, social interactions, and more. We refer to this as the ‘Turing test for vision,’ i.e., the ability of a machine to use vision to answer a large and flexible set of queries about objects and agents in an image in a human-like manner. Queries might be about objects, their parts, and spatial relations between objects, actions, goals, and interactions. Understanding queries and formulating answers requires interactions between vision and natural language. Interpreting goals and interactions requires connections between vision and social cognition. Answering queries also requires task-dependent processing, i.e., different visual processes to achieve different goals.