Sensory systems encode natural scenes with representations of increasing complexity. In vision, edges encoded in the early visual cortex are combined by downstream regions into geometric primitives, shapes, and ultimately percepts. This view is supported by both experimental results and a number of computational models. In audition, by contrast, very little is known about analogous grouping principles that could give rise to mid-level representations of natural sounds.

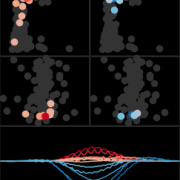

In this project, we aim to understand structures of intermediate complexity that occur in natural acoustic scenes. We are developing a hierarchical, generative model of sound that learns combinations of basic spectrotemporal features resembling the receptive fields of neurons in the early auditory system. We seek to build intuition about the statistical regularities of the natural acoustic environment and to predict which patterns are encoded in higher auditory areas of the brain. We also hope that the model will find applications in sound-processing technology.