Modeling & Data Analysis Tools & Datasets

- Decoding Neural Data
- Probabilistic Models of Cognition
- Analyzing fMRI Data
- Decoding Hippocampal Place Cell Data
- TELLab: The Experiential Learning Lab
- Show My Data: Data Visualization Web Apps
- ThreeDWorld (TDW)
- Language and Vision Ambiguities (LAVA) Corpus
- Treebank of Learner English (TLE)
- iCub Image Dataset
- Kreiman Lab Repository

Do you have additional supporting material related to the resources below, or a new software tool, modeling code, or dataset that others may find useful in an educational context? Please contact us through the feedback form with more information.


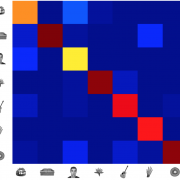

The Neural Decoding Toolbox enables researchers to analyze neural data from sources such as single-cell recordings, fMRI, MEG, and EEG, in order to understand what information the data contain and how that information is encoded and decoded in the brain. Learn about neural decoding methods, download the toolbox and sample datasets, and run examples in MATLAB or R.
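To make "decoding" concrete: a classifier is trained to predict which stimulus was shown from a population's firing-rate pattern, and its cross-validated accuracy measures how much stimulus information the population carries. The sketch below is a minimal pure-Python illustration of a maximum-correlation (template-matching) classifier, written for this page and not taken from the Neural Decoding Toolbox; the function names and the synthetic tuning data are hypothetical.

```python
import math
import random
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den if den > 0 else 0.0


def train_templates(patterns, labels):
    """Average the training patterns of each class into one template per class."""
    by_label = {}
    for p, lab in zip(patterns, labels):
        by_label.setdefault(lab, []).append(p)
    return {lab: [mean(col) for col in zip(*ps)] for lab, ps in by_label.items()}


def predict(templates, pattern):
    """Return the class whose template is most correlated with the pattern."""
    return max(templates, key=lambda lab: pearson(templates[lab], pattern))


def decoding_accuracy(patterns, labels, n_folds=5):
    """k-fold cross-validated accuracy; trials are assigned to folds by index modulo n_folds."""
    idx = list(range(len(patterns)))
    correct = 0
    for f in range(n_folds):
        test = [i for i in idx if i % n_folds == f]
        train = [i for i in idx if i % n_folds != f]
        templates = train_templates([patterns[i] for i in train], [labels[i] for i in train])
        correct += sum(predict(templates, patterns[i]) == labels[i] for i in test)
    return correct / len(patterns)


if __name__ == "__main__":
    rng = random.Random(0)
    n_neurons, n_stimuli, n_trials = 20, 3, 30
    # Hypothetical tuning: each stimulus evokes a different mean rate in each neuron
    tuning = [[rng.uniform(0, 10) for _ in range(n_neurons)] for _ in range(n_stimuli)]
    patterns, labels = [], []
    for _ in range(n_trials):
        for s in range(n_stimuli):
            # One simulated trial: mean rates plus Gaussian trial-to-trial noise
            patterns.append([r + rng.gauss(0, 2) for r in tuning[s]])
            labels.append(s)
    print("cross-validated accuracy:", decoding_accuracy(patterns, labels))
```

Accuracy well above chance (1/3 for three stimuli) suggests the simulated population carries stimulus information. The toolbox provides its own classifiers, feature preprocessing, and cross-validation machinery, so consult its documentation for the actual interface.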

Resources to support the implementation and testing of probabilistic models and inference methods, including an interactive electronic text with an embedded programming environment, a web-based probabilistic programming language, working model implementations, and sample datasets.

Learn about basic methods for fMRI data analysis and explore software tools in MATLAB and C that implement some of these methods, along with sample datasets. Includes a MATLAB-based lab activity that requires minimal programming experience.

A place cell is a type of pyramidal neuron in the hippocampus that becomes active when an animal enters a particular location in its environment (the cell's place field). Download a dataset of neural recordings from place cells in the hippocampus of a rat moving along a linear track, obtained from Matt Wilson’s lab. Explore a MATLAB lab activity that recovers the animal’s trajectory from the neural activity using multiple estimation techniques.
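One standard way to recover position from place-cell activity is Bayesian decoding: treat each cell's spike count in a short time bin as Poisson with a rate set by its place field, then choose the position that maximizes the posterior. The sketch below assumes Gaussian place fields, a flat prior over positions, and simulated spikes on a unit-length track; all names and parameter values are hypothetical, and this is not the lab activity's MATLAB code, which may use different estimators.

```python
import math
import random


def place_field(x, center, peak_rate=20.0, width=0.1, baseline=0.5):
    """Hypothetical Gaussian place field: firing rate (Hz) at position x on a track of length 1."""
    return baseline + peak_rate * math.exp(-((x - center) ** 2) / (2 * width ** 2))


def decode_position(counts, centers, positions, dt):
    """Return the position bin with maximum posterior under a Poisson model and a flat prior."""
    best_pos, best_logp = None, -math.inf
    for x in positions:
        logp = 0.0
        for n, c in zip(counts, centers):
            lam = place_field(x, c) * dt  # expected spike count in this time bin
            logp += n * math.log(lam) - lam  # Poisson log-likelihood without the log(n!) term
        if logp > best_logp:
            best_pos, best_logp = x, logp
    return best_pos


def poisson_sample(lam, rng):
    """Draw a Poisson random number (Knuth's multiplication method; fine for small lam)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


if __name__ == "__main__":
    rng = random.Random(0)
    centers = [i / 29 for i in range(30)]      # 30 hypothetical cells tiling the track
    positions = [i / 100 for i in range(101)]  # candidate position bins
    dt = 0.25                                  # 250 ms time bins (assumed)
    for k in range(11):                        # simulated run from 0.0 to 1.0
        true_x = k / 10
        counts = [poisson_sample(place_field(true_x, c) * dt, rng) for c in centers]
        est = decode_position(counts, centers, positions, dt)
        print(f"true {true_x:.2f}  decoded {est:.2f}")
```

With a flat prior, the posterior maximum coincides with the maximum-likelihood estimate. Adding a prior that favors positions near the previous estimate is a common way to smooth the decoded trajectory.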

TELLab is an online tool for collaborative learning in the design of psychology experiments, developed by a group of psychologists, computer scientists, and educators at Harvard University and other schools. Learn about this resource and see examples of how it was used in introductory psychology courses at Wellesley and Princeton.

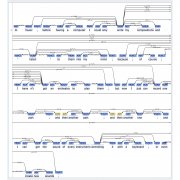

Access simple, elegant, and powerful data visualization tools in the form of free, easy-to-use web apps, for use in research and teaching. The apps can handle many types of data, and high-resolution visualizations can be downloaded for presentations and publications.

ThreeDWorld (TDW) is a general-purpose virtual world simulation platform that supports multi-modal physical interactions between mobile agents and objects in a wide variety of rich 3D environments, with real-time, near-photorealistic image rendering. Initial experiments enabled by the platform explore the transfer of visual and sound recognition capabilities from the virtual to the real world, as well as the testing of models of physical scene understanding and social interactions. Lead Developer Jeremy Schwartz provides a tutorial on the TDW platform in this video.

Human language understanding relies critically on the ability to obtain unambiguous representations of linguistic content. The LAVA corpus contains ambiguous English sentences, annotated with syntactic and semantic parses, coupled with visual scenes that depict the different interpretations of each sentence, enabling their disambiguation.

The Treebank of Learner English is a collection of over 5,000 English as a Second Language (ESL) sentences annotated with part-of-speech tags and syntactic dependency trees, written by upper-intermediate adult learners of English from 10 native language backgrounds. The treebank supports linguistic and computational research and education on language learning and on the automatic processing of ungrammatical language.
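Dependency treebanks are commonly distributed as tab-separated text with one token per line. The sketch below assumes the Universal Dependencies CoNLL-U layout (ten columns: ID, FORM, LEMMA, UPOS, XPOS, FEATS, HEAD, DEPREL, DEPS, MISC); check the TLE release documentation to confirm the actual file format. The sample sentence is invented for illustration and does not come from the treebank.

```python
from typing import Dict, List, Tuple

# Column names of the CoNLL-U format (assumed to match the treebank files)
FIELDS = ["id", "form", "lemma", "upos", "xpos", "feats", "head", "deprel", "deps", "misc"]


def parse_conllu(text: str) -> List[List[Dict[str, str]]]:
    """Split CoNLL-U text into sentences, each a list of token dicts."""
    sentences, current = [], []
    for line in text.splitlines():
        if not line.strip():
            if current:  # a blank line ends the current sentence
                sentences.append(current)
                current = []
            continue
        if line.startswith("#"):
            continue  # sentence-level comments such as "# text = ..."
        cols = line.split("\t")
        if "-" in cols[0] or "." in cols[0]:
            continue  # skip multiword-token ranges and empty nodes
        current.append(dict(zip(FIELDS, cols)))
    if current:
        sentences.append(current)
    return sentences


def dependencies(sentence: List[Dict[str, str]]) -> List[Tuple[str, str, str]]:
    """Return (head_form, deprel, dependent_form) triples; head ID 0 is the root."""
    forms = {tok["id"]: tok["form"] for tok in sentence}
    forms["0"] = "ROOT"
    return [(forms[tok["head"]], tok["deprel"], tok["form"]) for tok in sentence]


# Hypothetical toy sentence (not from the TLE) with a learner-style agreement error
SAMPLE = "\n".join([
    "# text = She like apples.",
    "1\tShe\tshe\tPRON\tPRP\t_\t2\tnsubj\t_\t_",
    "2\tlike\tlike\tVERB\tVBP\t_\t0\troot\t_\t_",
    "3\tapples\tapple\tNOUN\tNNS\t_\t2\tdobj\t_\tSpaceAfter=No",
    "4\t.\t.\tPUNCT\t.\t_\t2\tpunct\t_\t_",
    "",
])

if __name__ == "__main__":
    for sent in parse_conllu(SAMPLE):
        for head, rel, dep in dependencies(sent):
            print(f"{head} --{rel}--> {dep}")
```

Keeping each token as a dictionary keyed by column name makes it easy to filter by part-of-speech tag or relation label when exploring the annotations.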

Collections of images of everyday objects obtained with the iCub robot as it observes its environment, to support the evaluation of object recognition models. Learn about current research by the iCub team and access the iCub project site to download the image datasets.

An extensive collection of code, data, and databases produced by the Kreiman Lab, headed by Gabriel Kreiman at Harvard, Harvard Medical School, and Boston Children's Hospital. Explore, for example, behavioral data and modeling code from a study by Zhang et al. (2018) that presents a computational model of invariant visual search in complex natural scenes, inspired by human visual search performance. Please read the licensing agreement located on the Kreiman Lab website before using any resources.